
Vectorising Wikipedia into just 650MB

In our latest project, we've vectorised the entire English-language Wikipedia into a compact 650MB file. Why? We're working on some exciting entity-related projects and needed a way to efficiently represent and query large amounts of text. The file could be useful to others too, so we thought we'd share some details here.

Why vectorise Wikipedia?

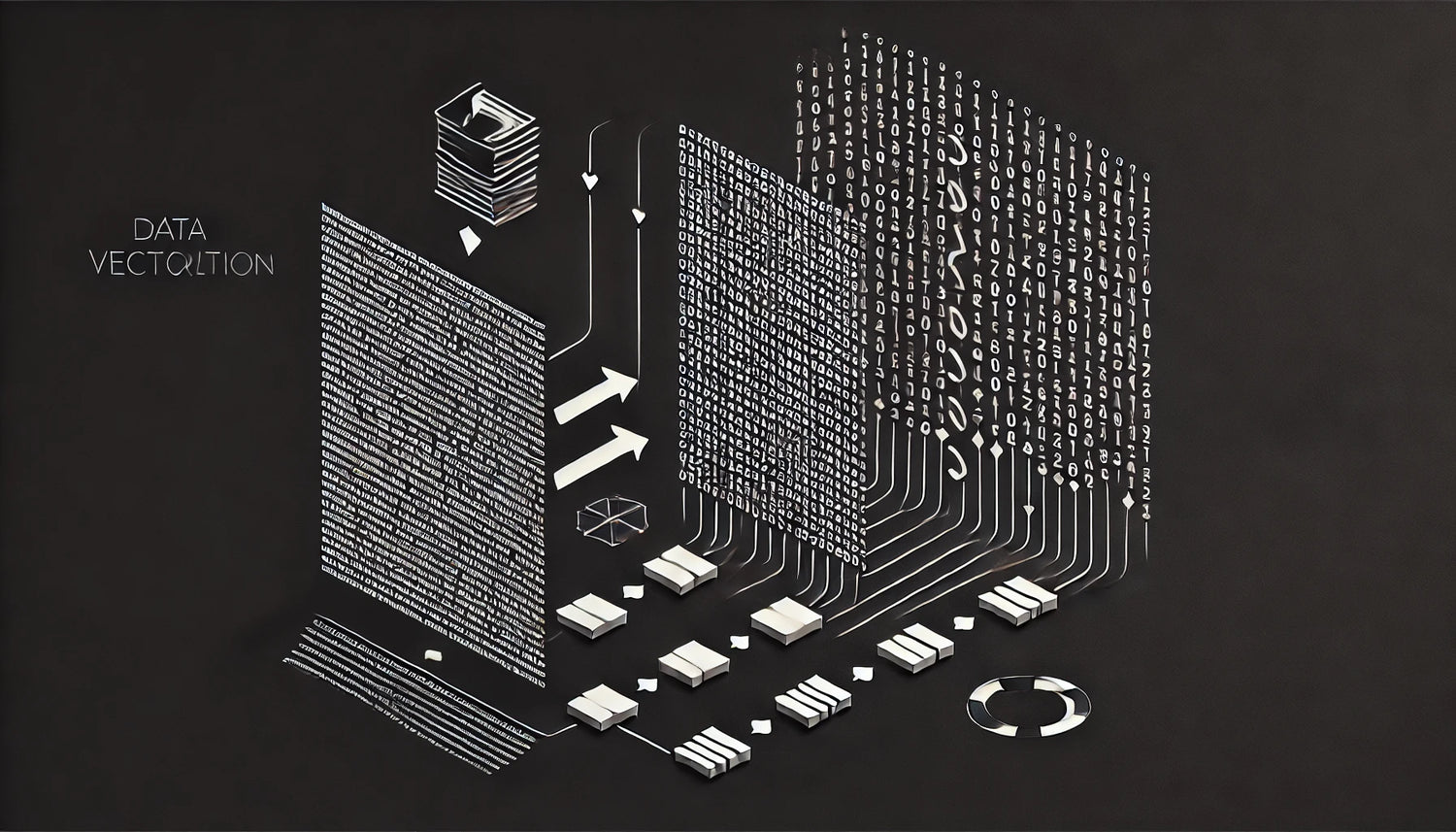

For those unfamiliar, vectorisation is the process of turning text into a numerical format that machines can understand and compute against. With the sheer amount of information housed in Wikipedia, creating a compact vectorised representation opens the door for a number of uses, particularly around natural language processing.
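To make "compute against" concrete: once two pieces of text are vectors, how related they are becomes a number. The toy sketch below scores vectors with cosine similarity. The 3-dimensional vectors are invented for illustration and are not real model outputs, which have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-D "embeddings" for illustration only.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
car = [0.0, 0.1, 0.9]

print(cosine_similarity(cat, kitten) > cosine_similarity(cat, car))  # True
```

If every vector is first scaled to unit length, cosine similarity reduces to a plain inner product. That is why inner-product search is a natural fit for this kind of data.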

We've used the Snowflake/snowflake-arctic-embed-m-v1.5 model to extract dense, high-quality vectors, each with a full 768 dimensions. These vectors capture semantic information from the Wikipedia page text. The model produces full-precision vectors, but to fit the index into 650MB they are compressed with product quantization (see How it works below). This trades some precision for a much smaller file.
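A quick back-of-the-envelope calculation shows why compression matters for the file size. This is a minimal sketch that assumes uncompressed vectors are stored as float32 (4 bytes per dimension). The vector count `n` and the PQ settings `m` and `nbits` are hypothetical, since they aren't stated here, and the helper names are illustrative.

```python
def flat_float32_bytes(n_vectors: int, dim: int = 768) -> int:
    """Bytes for n uncompressed float32 vectors (4 bytes per dimension)."""
    return n_vectors * dim * 4


def pq_code_bytes(n_vectors: int, m: int, nbits: int = 8) -> int:
    """Bytes for product-quantization codes only: m sub-codes of nbits bits per vector.

    Excludes IVF list ids, centroids and PQ codebooks, which add overhead on top.
    """
    per_vector = (m * nbits + 7) // 8  # round up to whole bytes
    return n_vectors * per_vector


# Hypothetical figures for illustration only.
n = 1_000_000
m = 96  # FAISS requires m to divide the dimension evenly: 768 / 96 = 8 dims per sub-vector

print(flat_float32_bytes(1))  # 3072 bytes per full-precision vector
print(flat_float32_bytes(n))  # 3072000000 bytes, about 3.1 GB
print(pq_code_bytes(n, m))    # 96000000 bytes, about 96 MB
print(flat_float32_bytes(n) // pq_code_bytes(n, m))  # 32 (times smaller)
```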

How it works

We use a FAISS IVFPQ index with a flat inner-product (FlatIP) coarse quantizer, chosen for its balance between speed and accuracy. FAISS, for those unfamiliar, is a popular library from Facebook AI Research (now Meta) for efficient similarity search.

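- Index type: IVFPQ (inverted file with product quantization)

- Coarse quantizer: FlatIP (exact, brute-force inner-product search over the partition centroids)

- nlist: 2048 (the number of partitions, or inverted lists, that the vectors are clustered into)

- nprobe: 128 (how many partitions are scanned during a search; higher values are more accurate but slower)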
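To show what nlist and nprobe control, here's a toy inverted-file search in plain Python. This is a minimal sketch, not FAISS's implementation. The centroids are hand-picked rather than trained with k-means, there are 3 lists instead of 2048, and candidates are scored exactly against the raw vectors, whereas IVFPQ scores them against product-quantized codes. All function names are hypothetical.

```python
import math


def normalise(v):
    """Scale a vector to unit length, so inner product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def build_ivf(vectors, centroids):
    """Flat inner-product coarse quantizer: put each vector id in the list of its best centroid."""
    lists = {c: [] for c in range(len(centroids))}
    for vid, v in enumerate(vectors):
        best = max(range(len(centroids)), key=lambda c: dot(v, centroids[c]))
        lists[best].append(vid)
    return lists


def search_ivf(query, vectors, centroids, lists, nprobe, k):
    """Scan only the nprobe lists whose centroids score highest; return (scores, ids)."""
    probe = sorted(range(len(centroids)), key=lambda c: dot(query, centroids[c]), reverse=True)[:nprobe]
    candidates = [vid for c in probe for vid in lists[c]]
    top = sorted(((dot(query, vectors[vid]), vid) for vid in candidates), reverse=True)[:k]
    return [s for s, _ in top], [vid for _, vid in top]


# Toy 2-D data: 5 vectors and 3 hand-picked centroids (stand-ins for trained ones).
vectors = [normalise(v) for v in ([1, 0.1], [0.9, 0.3], [0.1, 1], [0.2, 0.9], [-1, 0.1])]
centroids = [normalise(c) for c in ([1, 0], [0, 1], [-1, 0])]
lists = build_ivf(vectors, centroids)
print(lists)  # {0: [0, 1], 1: [2, 3], 2: [4]}

query = normalise([1, 0.2])
print(search_ivf(query, vectors, centroids, lists, nprobe=1, k=3)[1])  # [0, 1]: only list 0 scanned
print(search_ivf(query, vectors, centroids, lists, nprobe=2, k=3)[1])  # [0, 1, 3]
```

With nprobe=1 the search returns fewer than k results, because only one partition is scanned. Raising nprobe widens the search at the cost of more comparisons. With the settings above, each query scans 128 of 2048 lists, or 1/16 of the partitions.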

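When you query the index, it returns both the distances and the indices of the nearest neighbours in the vector space. Because the index uses inner product, these "distances" are similarity scores, so higher means more similar. The page URLs are stored in the same row order as the index, so each returned index is also the row number of its URL.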
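Here's a small sketch of that row-number lookup. In FAISS's Python API, `index.search(x, k)` returns the scores and indices as two arrays with one row per query and k columns. FAISS also uses index -1 to pad results when it finds fewer than k neighbours. The sketch assumes the URLs have already been loaded into a Python list in index row order. The download's file format isn't described here, so `urls` and `results_to_urls` are hypothetical names.

```python
def results_to_urls(distances, indices, urls):
    """Pair each returned row number with the URL stored at that same row.

    distances and indices follow the FAISS search() layout: one row per query,
    k columns each. Entries with index -1 are padding and are skipped.
    """
    results = []
    for row_d, row_i in zip(distances, indices):
        hits = [(urls[i], float(d)) for d, i in zip(row_d, row_i) if i != -1]
        results.append(hits)
    return results


# Hypothetical URL list and search output for a single query with k=3.
urls = [
    "https://en.wikipedia.org/wiki/Cat",
    "https://en.wikipedia.org/wiki/Dog",
    "https://en.wikipedia.org/wiki/Car",
]
distances = [[0.91, 0.85, 0.0]]
indices = [[1, 0, -1]]  # third slot is padding

print(results_to_urls(distances, indices, urls))
```

The same function works on the NumPy arrays FAISS returns, since iterating a 2-D array yields its rows and NumPy integers can index a Python list.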

Practical uses

While we've created this dataset for our own entity work, we realise it could be useful for other tasks. Whether you're in SEO, AI research, or data science, this compact representation of Wikipedia could streamline workflows such as semantic search over Wikipedia, finding pages related to a topic, or matching entities in your own text to Wikipedia articles.

Here's the link to download.